Tech Column: Music technology glossary

David Guinane

Tuesday, March 1, 2022

Do you ever find yourself skimming over the seemingly evasive terminology that peppers these tech pages, or other music education resources? David Guinane provides a handy glossary for your reference whenever you need it.

Tanyajoy/AdobeStock

The number of acronyms and technical terms in the realm of ‘music technology’ can feel overwhelming, and often alienating. Do not be intimidated! Above all, musicianship and musicality are the most valued attributes of anyone using technology. Having said that, a working knowledge of a few common terms has its uses, so a few essentials are explained below:

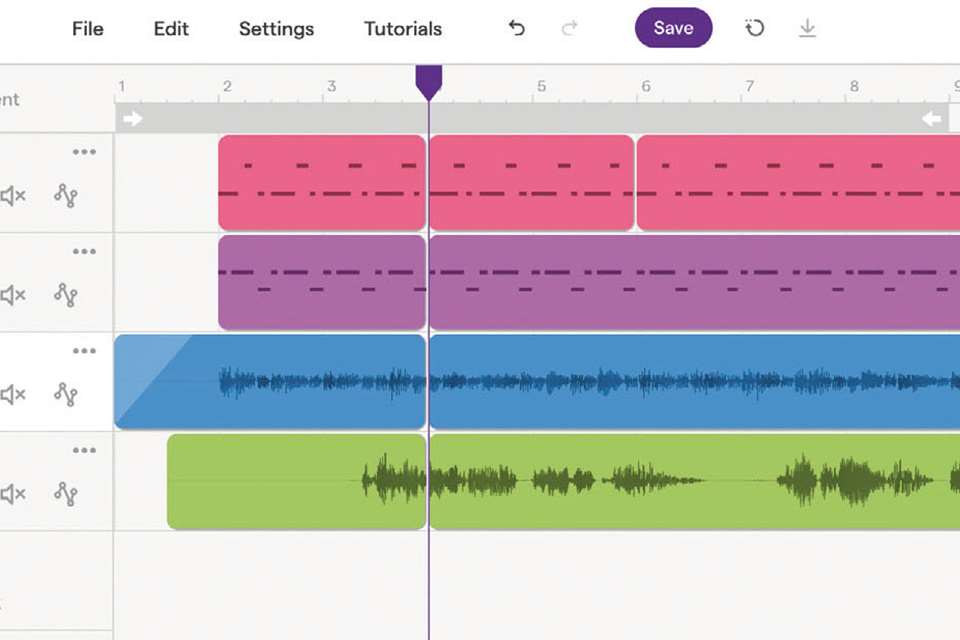

DAW

DAW is an acronym that means ‘digital audio workstation’. It is sometimes spelt out when spoken (dee, ay, double you), or pronounced like ‘door’ (which sounds silly and can be confusing, especially if you are explaining something and you are standing by an actual door). It can refer to any software used for sequencing and creating music, whether recorded or synthesised. GarageBand, Logic, Soundtrap and Cubase are examples of popular DAWs.

MIDI

Another acronym (musical instrument digital interface), this is pronounced as a word (like the French for ‘midday’). MIDI is complicated, so just remember a ‘MIDI track’ is one that can be easily edited in a DAW. You can change the pitch, length, sound and shape of MIDI data after it has been recorded or programmed. Pure audio (such as a singer or live instrument recording) is not as malleable after the fact.
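For the curious, here is a rough Python sketch of why MIDI is so easy to edit: a MIDI note is really just a handful of numbers, so changing it after the fact is simple arithmetic. The `Note` class and the `transpose` function are made up for illustration; they are not part of any DAW or MIDI library.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    """A hypothetical, simplified picture of one note on a MIDI track."""
    pitch: int      # MIDI note number (60 is middle C), at most 127
    start: float    # where the note begins, in beats
    length: float   # how long the note lasts, in beats
    velocity: int   # how hard the key was struck, at most 127


def transpose(notes, semitones):
    """Return copies of the notes shifted up or down by a number of semitones."""
    return [
        replace(n, pitch=max(0, min(127, n.pitch + semitones)))
        for n in notes
    ]


# A C major arpeggio: C, E, G
phrase = [Note(60, 0.0, 1.0, 90), Note(64, 1.0, 1.0, 80), Note(67, 2.0, 2.0, 100)]

# Shift the whole phrase up a tone; timing and dynamics are untouched
up_a_tone = transpose(phrase, 2)
```

Doing the same to a recording of a singer would mean processing the audio itself, which is why pure audio is less forgiving.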

Sample

A sample is any pre-existing piece of audio that can be imported into a project and used as part of a track. The recorded ‘loops’ that come with GarageBand are samples, as is the hook from Bootylicious by Destiny's Child (it originally comes from the track Edge of Seventeen by Stevie Nicks). Finding, editing, and reusing samples is a key part of much electronically produced music.

Plugins

Often confusing to the uninitiated, a plugin is anything you can ‘plug in’ to a DAW to increase its functionality. A plugin could be a specific synthesiser, or a specific reverb effect. You can think of them as add-ons to a DAW. Don't panic if you see the acronym VST (virtual studio technology) before the word ‘plugin’ – this is a common standard.

EQ

EQ, or equalisation, is a versatile tool that is used to make your music sound better (in a nutshell). With EQ, you can boost (turn up) or cut (turn down) various frequencies in a track or project. It can be used to improve the overall quality of the ‘mix’, or to isolate and remove certain unwanted frequencies.

Controller

A controller is a device that sends ‘musical’ information to the computer, often using MIDI. MIDI controllers often look like a (musical) keyboard, and send information such as pitch, duration, or velocity (dynamics), to a DAW. They can be used to ‘trigger’ (start) certain events in live performance, such as beginning/ending a loop, or adding/changing an effect. They don't always look like keyboards; you may see drum pads, a guitar controller, or even a wind controller (that you blow into) used to send data to your computer.
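Strictly speaking, a MIDI controller sends a note number rather than a frequency, and the instrument or DAW on the receiving end works out the pitch. A minimal Python sketch of that conversion follows, assuming equal temperament and the usual convention that note 69 is the A above middle C at 440Hz. The function name `midi_note_to_hz` is invented for this example.

```python
def midi_note_to_hz(note_number, a4_hz=440.0):
    """Convert a MIDI note number to a frequency in hertz.

    Assumes equal temperament, with note 69 tuned to a4_hz.
    Each semitone multiplies the frequency by the twelfth root of 2.
    """
    return a4_hz * 2 ** ((note_number - 69) / 12)


middle_c = midi_note_to_hz(60)   # roughly 261.63 Hz
a_above = midi_note_to_hz(69)    # 440 Hz by definition
```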

Compression

Compression, along with reverb, is probably one of the most used effects in a DAW. Simply put, compression makes the loudest bits quieter and, once the overall level is turned back up, the quietest bits louder (it ‘compresses’ the extremes). When done correctly, this usually produces a more pleasant listening experience.
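To see the idea in numbers, here is a simplified Python sketch of a compressor working on levels in decibels. The `threshold_db`, `ratio` and `makeup_db` settings are assumptions based on the controls found on a typical compressor, and the function ignores things a real plugin handles (such as how quickly it reacts), so treat it as an illustration rather than a recipe.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=0.0):
    """Return the output level in dB for a given input level in dB.

    Anything above the threshold is scaled down by the ratio, then the
    whole signal is raised by the make-up gain.
    """
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db


loud_bit = compress_db(-4.0, makeup_db=6.0)    # -4 dB in, -10 dB out
quiet_bit = compress_db(-30.0, makeup_db=6.0)  # -30 dB in, -24 dB out
```

In this example the gap between the loud and quiet moments shrinks from 26dB to 14dB: the loud bit has come down, and the quiet bit has come up.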

Latency

Generally speaking, if something isn't working, just slowly stroke your chin, furrow your brow and mutter ‘hmm, latency’ a few times. It looks impressive and buys you time to internally panic. Latency is the delay between inputting a signal (such as playing a key on a controller) and hearing it played back, once the DAW has processed it. High latency can cause problems, like out-of-time recordings, or audio effects that don't work as intended. The most common solution is to buy more expensive equipment.
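One contributor to latency that you can usually adjust is the audio buffer size, a setting found in most DAWs' audio preferences. This goes beyond what is described above, and the figures are only part of the total delay, but a rough Python sketch shows how the numbers relate. The function name and the default sample rate are assumptions for illustration.

```python
def buffer_latency_ms(buffer_size_samples, sample_rate_hz=44100):
    """Delay added by one audio buffer, in milliseconds.

    This is only one part of the round trip; the audio interface,
    drivers and plugins add their own delay on top.
    """
    return buffer_size_samples / sample_rate_hz * 1000


small_buffer = buffer_latency_ms(256)    # a little under 6 ms
large_buffer = buffer_latency_ms(1024)   # a little over 23 ms
```

A smaller buffer means less delay but asks more of the computer, which is where the more expensive equipment comes in.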

Envelope (and the confusing but related acronym ADSR)

In music technology, envelope describes the ‘shape’ of a sound. For example, hitting a piano key will create an immediate, loud ‘start’ of the sound (attack), followed by a reduction in volume (decay). This quieter sound will continue for a time (sustain), before fading to nothing (release). The acronym ADSR is used to describe these four stages in a sound's envelope. Envelopes do more than describe sounds: adjusting envelope parameters is a vital part of shaping synthesised sound.
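The four stages can be sketched in a few lines of Python. This is a simplified model with straight-line segments and made-up default values; real synthesisers often use curved segments. Note that on a synthesiser, attack, decay and release are times, while sustain is a level. The function name `adsr_level` and the `note_length` parameter are invented for the example.

```python
def adsr_level(t, attack=0.01, decay=0.2, sustain=0.6, release=0.5, note_length=1.0):
    """Volume (0.0 to 1.0) at t seconds after the key is pressed.

    attack, decay and release are durations in seconds; sustain is a level.
    Assumes the key is held (note_length) for longer than attack + decay.
    """
    if t < 0:
        return 0.0
    if t < attack:                      # rising to full volume
        return t / attack
    if t < attack + decay:              # falling to the sustain level
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    if t < note_length:                 # key still held
        return sustain
    if t < note_length + release:       # key let go, fading out
        return sustain * (1.0 - (t - note_length) / release)
    return 0.0


held = adsr_level(0.5)     # during the sustain stage
fading = adsr_level(1.25)  # halfway through the release
```

Lengthening `attack` gives a sound that swells in gently; shortening `release` makes it stop abruptly.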

Quantising/Quantisation

Most teachers benefit from a working knowledge of this tool. When working with MIDI tracks, quantising can be used to ‘make music sound in time’. It does this by ‘snapping’ each note to a predetermined point in the bar, depending on the settings. For example, 1/4 quantising will snap each note to the nearest quarter note, or crotchet, or quarter of a bar in 4/4 (it makes sense, trust me). A general rule of thumb is to quantise to the shortest note value in a phrase (so if semiquavers are used, try 1/16 quantisation). Be aware that this doesn't fix really out-of-time music, and it can remove some of the organic, musical qualities of a track.
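The ‘snapping’ is just rounding. Here is a minimal Python sketch, assuming note positions are measured in crotchet beats; the function name `quantise` and its `grid` parameter are invented for illustration, and a real DAW offers more options (such as swing, or quantising only part of the way).

```python
CROTCHETS_PER_SEMIBREVE = 4


def quantise(start_beats, grid=4):
    """Snap a note's start position (in crotchet beats) to the nearest 1/grid note.

    grid=4 snaps to crotchets, grid=8 to quavers, grid=16 to semiquavers.
    """
    step = CROTCHETS_PER_SEMIBREVE / grid   # grid spacing in crotchet beats
    return round(start_beats / step) * step


slightly_early = quantise(0.9, grid=4)    # snaps to beat 1.0
slightly_late = quantise(1.1, grid=16)    # snaps back to beat 1.0
off_beat = quantise(1.15, grid=16)        # snaps to 1.25, the next semiquaver
```

The last line shows the catch: a note played far enough out of time snaps to the wrong grid point, which is why quantising cannot rescue a really untidy performance.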

Google is your friend if you come up against an unfamiliar term. Most of the time, there is a logic behind the name. Remember, above all else, music technology requires considered listening with a musical ear much more than a memorised list of terms.